Selfie's Reflections on Formal Verification for TLS 1.3: Largely Opaque

2019-04-11Selfie is a new attack on TLS 1.3 that was published last week by researchers Nir Drucker and Shay Gueron. The paper’s name is inspired by its core finding:

- In TLS 1.3, as in some previous versions of TLS, it’s possible to authenticate a session using a Pre-Shared Key, or PSK. A PSK is simply a 256-bit secret symmetric key that’s agreed upon beforehand by the two parties. Since only the Client and the Server know a specific PSK, it’s safe to assume that they can authenticate one another using it. We call that property mutual authentication.

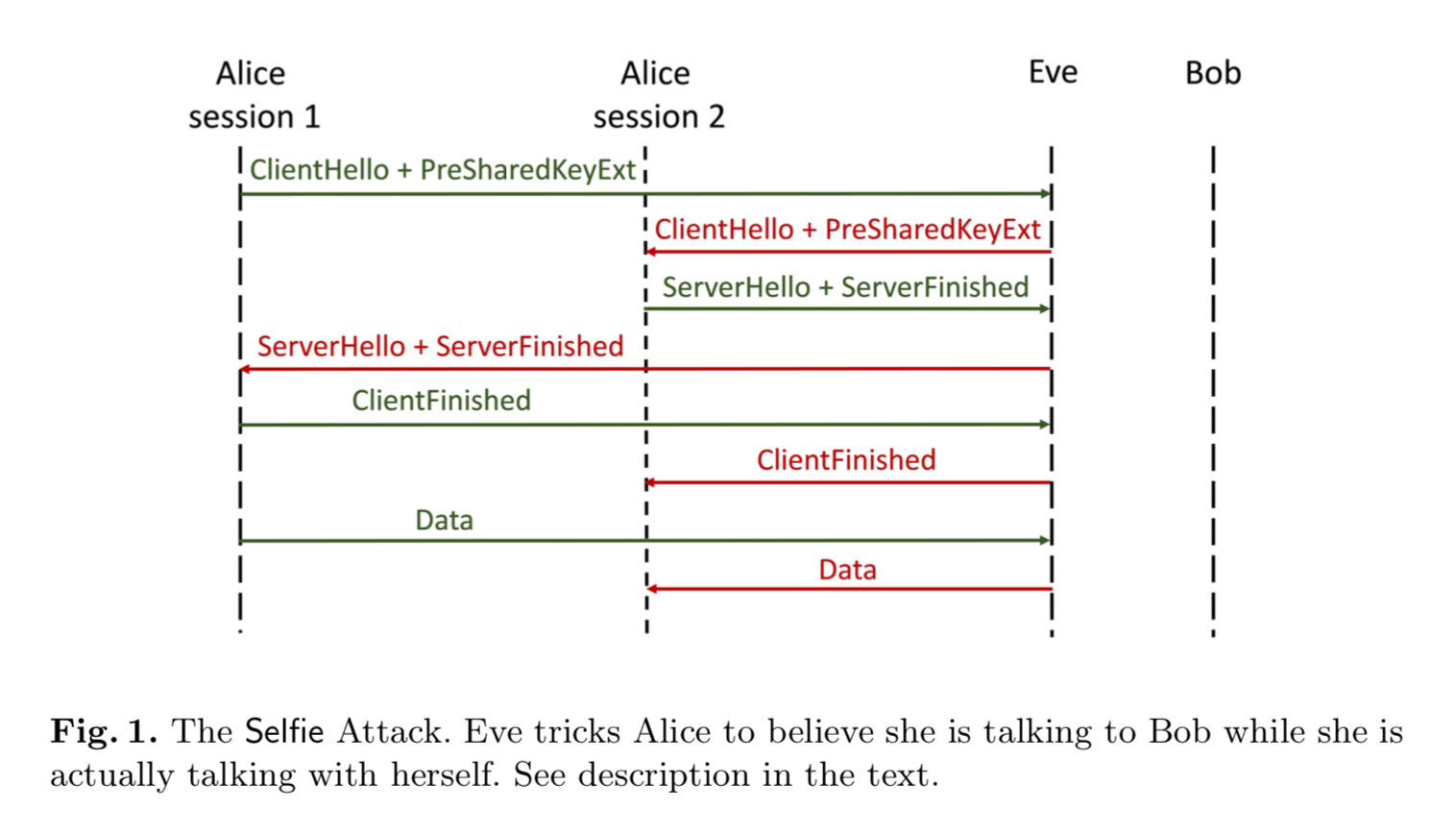

- If the Client and Server in a TLS 1.3 session use PSK authentication with a certain type of PSK, an attack appears which breaks mutual authentication: specifically, the Client could be led to believe that it’s talking to the Server, whereas it’s actually talking to itself. This is because both the Client and the Server are authenticated using the same exact PSK, which, in order for this attack to work, has to be one that neither of them specifically generated (i.e. an “external” PSK, which is a PSK that was distributed out-of-band to the Client and Server and for which the generation was not under the specific control of any party).

A Client talking to itself could be seen as having its own messages being “reflected” back at it, hence the name “Selfie”. Here’s a useful sequence diagram from the paper that illustrates what the attack looks like:

The Selfie authors then suggest three ways for preventing this kind of attack across all sorts of secure channel protocols that could potentially be affected. Their preferred way is to generate a unique identity for every principal, make sure that identity is known across the network, and then have each pair of principals mix their identities into each session they end up establishing. It’s not a cheap fix: guaranteeing identity uniqueness isn’t trivial, and authenticating identities, especially out-of-band, has long been a troublesome component of secure communication systems.

For TLS 1.3 specifically, however, their mitigations are easier to apply: the Selfie authors recommend using external PSKs only in conjunction with server certificates. A better solution, they continue, would also be to simply forbid the sharing of a specific PSK between more than one Client and Server. I’m personally pessimistic about how enforceable the latter recommendation would be in real-world deployments. But the former is fine.

Now, I’d like to get this out of the way before we continue: I would argue that Selfie has a very limited impact on the practical security of TLS 1.3 in a real-world setting, due simply to the fact that Clients are very rarely seen having fruitful TLS sessions in which they talk to themselves. Once the Client’s message is reflected back unto itself, the most likely thing that will happen is that the session data stream will be considered corrupt by the application layer, and the entire session will fall over.

However, Selfie remains a perfectly valid attack on TLS 1.3’s PSK-based mutual authentication logic. It’s fair to say that it should have been caught earlier by the TLS 1.3 WG and dealt with appropriately.

Formal verification efforts for TLS 1.3 didn’t catch Selfie…

At first glance, Selfie does indeed seem to fly in the face the unprecedented effort to formally verify TLS 1.3 as secure.

Formal verification has traditionally meant hand-written, game based proofs. But in recent years it has increasingly become a methodology where automated, tool-assisted verification is accomplished by describing protocol flows under either the symbolic model (e.g. using ProVerif/Tamarin) or the computational model (e.g. using CryptoVerif).

In the case of symbolic verification can allow for the rapid evaluation of real-world protocol flows against specific security queries under an active attacker (“is the authenticated key exchange forward secure?” “is the first message confidential?”). In the computational model, full game-based proofs can still be obtained, similarly to hand proofs, while being more easy to link to specific protocol flows across well-defined principals.

TLS 1.3 received formal verification attention of all sorts: hand-written game-based proofs, automated symbolic verification, computational model proofs, and even a gargantuan effort by Microsoft Research called Project Everest which aims to build an entirely functionally correct and formally verified HTTPS stack.

And yet in spite of all of this unprecedented effort to prove the security of TLS 1.3, Selfie still happened! As the Selfie authors point out in Section 6 of their paper:

The Selfie attack comes as a surprise, since TLS 1.3 is the first TLS version that was designed alongside with security proofs, e.g., the studies in {3, 5, 6, 7, 8, 9, 12, 13, 14}. This is even more surprising since the Selfie attack is actually a manifestation of a reflection attack that is already known in other contexts.

(Full disclosure: I’m a co-author on {3}, cited above.)

The Selfie authors then delve into existing work on formally verifying TLS 1.3 to try and find out why Selfie wasn’t spotted. In summary, they correctly conclude that the protocol flows that were envisaged by the formal verification efforts for TLS 1.3 weren’t comprehensive enough to catch the case where a Client uses TLS 1.3 with an external PSK and then proceeds to talk to itself for the entire session.

…because these formal verification efforts were geared towards eliminating more damaging classes of attacks.

Selfie is a legitimate and interesting attack on TLS 1.3. The Selfie paper is written with care and attention to detail. It’s a finding that deserves to be published.

But I think it’s important to explain why Selfie’s existence doesn’t meaningfully reflect on the status of the formal verification efforts for TLS 1.3.

Selfie is a finding with very limited practical implications and that relies on a protocol flow for TLS 1.3 (a Client talking to itself) that, in real-world usage, would almost certainly immediately lead to a corrupt data stream. When the formal modeling of TLS 1.3 was moving on with full steam, the security community was still reeling from the disclosure of protocol bugs and vulnerabilities that belong to entire classes of attacks that are more serious than the one which Selfie finds itself in.

Forget a Client being misled into talking to itself: the focus was on ruling out different classes of bugs that were comparatively critical in nature:

- Logjam, which hurried the radical reduction of supported cipher suites in TLS 1.3. This class of attacks was then subsequently ruled out using formal verification efforts.

- Triple Handshake, which led to significant changes to mutual authentication in TLS 1.3 that were then formally verified.

- SMACK, which inspired the radical reduction of the state machine space in TLS 1.3 from TLS 1.2, which was then formally verified.

- SLOTH, which led to the urgent removal of deprecated cryptographic primitives from TLS in TLS 1.3 as well as in other unrelated protocols.

- POODLE and Lucky13: attacks caused by weak authenticated encryption which were, again, modeled and formally analyzed in order to directly inform the design of TLS 1.3.

These are the sorts of disastrous attacks that formal verification for TLS 1.3 was paying attention to ruling out: total session compromise caused by ill-defined protocol state machines with a way-too-huge state space. Protocol failures caused by weak hash functions, Diffie-Hellman groups or long-term keys.

The list goes on. Every bullet point on that list was linked to a critical weakening in the real-world security of TLS, and this is the sort of dangerous stuff that formal verification was focusing on ruling out in TLS 1.3. Indeed, some of TLS 1.3’s formal verification efforts were able to rule out attacks such as Logjam or Triple Handshake even if TLS 1.3 was being run in parallel with TLS 1.2!

It’s easy to decide you’re justified in deriding formal verification efforts for TLS 1.3 when you’re not clear on what they were actually intended to rule out. Whenever a formal verification result is published, a few things are always clearly mentioned: the protocol flows being verified, the classes of attacks being checked for and ruled out, and the remaining protocol flows that are outside the scope of the accomplished verification effort.

Every one of the formal verification studies cited by the Selfie authors had this sort of diligent scoping written out for its formal analysis. None of them claim to rule out an attack like Selfie’s, because, given the much more serious classes of attacks the modeling was focused on, none of them produced a formal model for it. As such, Selfie’s appearance can’t really be held as a barometer by which to evaluate the accomplishments of TLS 1.3’s formal verification efforts.

Our models could still have been more comprehensive.

That being said, it’s still fair to recognize that, at least when it comes to our own formal verification efforts for TLS 1.3, we still could have produced models that were more comprehensive and that better captured the external PSK protocol flows. In our work, we modeled TLS 1.3 session flows in both the symbolic and computational model, using ProVerif and CryptoVerif. Here’s where we lacked precision sufficiently such that Selfie wasn’t detected:

- Our symbolic models were written such that mutual authentication via some PSK was considered “correct” if that PSK could establish mutual authenticated only between the Client and the Server to which it belonged. If it authenticated the Client to the Server, that was “correct”. But if it authenticated the Client to the Client, that was considered “correct” too.

- Our computational models were written such that they exclusively capture protocol flows where each PSK would be shared only between one Client and one Server, thereby inadvertently implementing a mitigation for Selfie that did not exist in the actual TLS 1.3 specification that we were evaluating.

While it’s true that formal verification for TLS 1.3 would have done better to be comprehensive enough to rule out Selfie, it shouldn’t come as a huge surprise that it didn’t. In fact, one could even argue that formal verification is the reason why attacks on TLS 1.3 protocol flows have been restricted to scenarios like Selfie, i.e. flows that would simply never occur in a real-world setting. At least when it came to our own research, however, we were focused on making sure that the catastrophic bugs that have plagued TLS 1.2 and prior can be, this time, hopefully steered clear from.