Why StopCOVID Fails as a Privacy-Preserving Design

2020-05-27

This analysis is written in collaboration with Anne Weine and Benjamin Lipp.

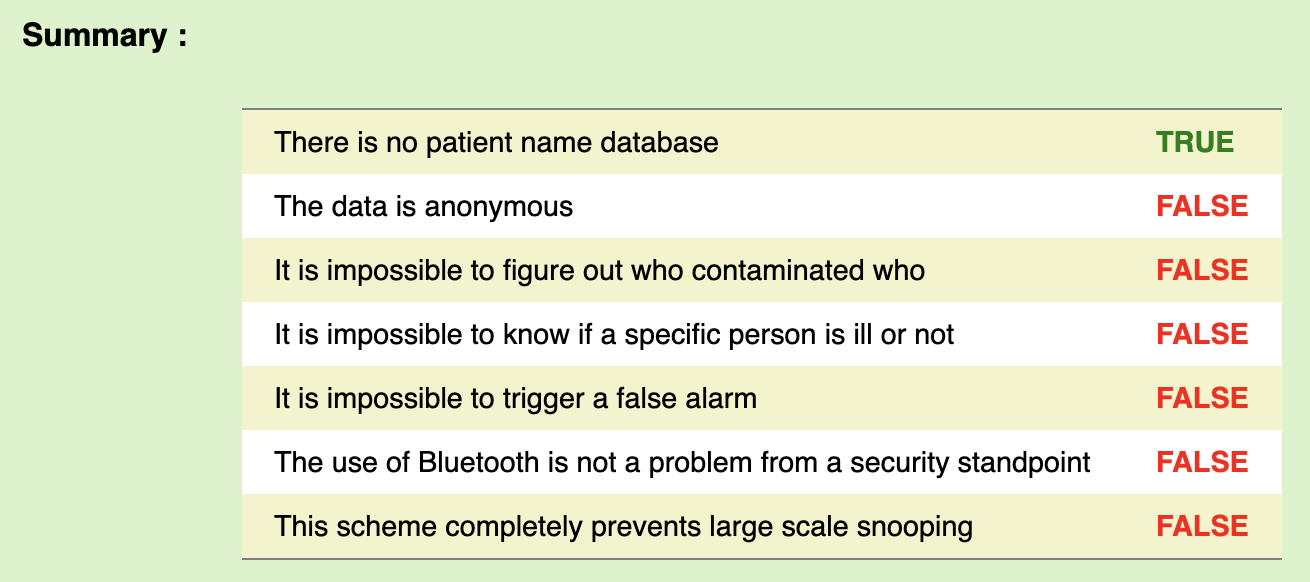

France’s StopCOVID application will be considered for nation-wide deployment today by French lawmakers, having recently obtained an opinion from the French Commission Nationale de l’Informatique et des Libertés (CNIL), which contained only light-handed criticism and suggestions for the application’s transparency measures. This has been falsely cited by the ROBERT/StopCOVID developers as constituting some kind of wide-ranging approval from CNIL, as I will further show later in this post.

Having closely followed the development of ROBERT, the application’s underlying protocol, and having recently reviewed the source code of the StopCOVID application itself, I am writing this post to justify my position: StopCOVID is not a privacy-preserving technology, and it is strictly unfit for deployment at the national level. In fact, I would go further and state that the lapses in StopCOVID’s design are so serious that its very consideration for national deployment may merit an independent scientific ethics review. I will justify this position with the arguments below.

Compromising a Single Server Deanonymizes All of France’s StopCOVID Usage

From the outset, ROBERT’s design indicated that the entire “privacy-preserving” contact tracing system would work by having a single centralized server generate and issue pseudonyms to all StopCOVID users. On April 18th, I asked regarding the fitness of this type of design for a supposedly privacy-preserving application. Namely, given that the ROBERT specification stated the following:

Anonymity of users from a central authority: The central authority should not be able to learn information about the identities or locations of the participating users, whether diagnosed as COVID-positive or not.

I asked, given that all pseudonyms are generated from the server after a REST API request from the user’s device/IP address:

In short, all of ROBERT is built on trust from central authorities and the assumption that they will behave honestly and be impervious to third-party compromise. I am unable to determine how this is a strong, or even serious and realistic approach to real user privacy. Could you please justify how this protocol achieves any privacy from authorities, and how the current model of assuming that all authorities are:

- Completely honest,

- Impervious to server/back-end compromise,

- Impervious to any transport-layer compromise or impersonation,

…is in any way realistic or something that can be taken seriously as a privacy-preserving protocol? Given the level of trust assurances that you are attributing to authorities, and given that authorities are responsible for generating, storing and communicating all pseudonyms directly to users to their devices, what security property is actually achieved in ROBERT in terms of pseudonymity between authorities and users?

Twelve days later, I received the following response from the ROBERT team:

In ROBERT scheme v1.0, the server is indeed responsible for generating, storing and communicating pseudonyms. This is done for two main reasons:

A user can only perform an Exposure Status Request phase corresponding to its pseudonyms. Indeed, the pseudonyms are linked to a secret key K_A on the server, and the server verifies that the request originates from the owner of the secret key K_A before answering. Therefore, an attacker cannot query the server for the exposure status of another user.

The pseudonyms of infected users are not exposed to other users. Since the computation of exposure status is done on the server, there is no need to publish pseudonyms of infected users as it may be the case with other solutions.

About the pseudonymity of users with regard to the server. The server does not store any other identifiers than the one included in the IDTable database (see section 3.2. Application Registration (Server Side)). During the Application Registration and the Exposure Status Request, the app may expose network identifiers that could compromise her real identity. To mitigate this risk, solutions like Mixnet or proxies could be used (as suggested in Section 6, footnote 11).

More generally, concerning the “honest but curious” assumption: this is a key assumption for the ROBERT v1.0 design as you noticed. It is not our responsibility, as privacy researchers, to judge whether or not this assumption is valid.

This topic could be discussed for hours, clearly. However, when looking at the “avis CNIL sur le projet d’application mobile StopCovid”, we have the feeling this is a reasonable assumption.

The above response makes two outrageous claims:

- It claims that the designers of a privacy-preserving system bear no responsibility for judging whether the assumptions they make are valid or suitable, even though they control the design and can freely limit or expand its use case. This abuses a tenet reserved for situations where privacy researchers evaluate third-party systems; here it is used to say, in effect, that the designers of from-scratch privacy-preserving systems bear no responsibility for any of their privacy assumptions.

- The final sentence of the response links to this CNIL report, which, aside from being a preliminary opinion that is far from completely approbatory of the ROBERT/StopCOVID design, has no bearing inside a scientific or technical discussion and therefore constitutes an appeal to authority.

Nevertheless, this fundamental tenet of the ROBERT design survived, and the ROBERT/StopCOVID team saw fit to implement it verbatim into the StopCOVID application stack.

StopCOVID/ROBERT’s Centralized Database Architecture

During today’s review of the StopCOVID/ROBERT source code, it became apparent that the StopCOVID server operated a singular MongoDB database which stored the following user state for each StopCOVID user:

    // robert-server-database/src/main/java/fr/gouv/stopc/robertserver/database/model/Registration.java
    public class Registration {
        @Id
        @ToString.Exclude
        private byte[] permanentIdentifier;

        @ToString.Exclude
        private byte[] sharedKey;

        private boolean isNotified;

        private boolean atRisk;

        private int lastStatusRequestEpoch;

        private int lastNotificationEpoch;

        private List<EpochExposition> exposedEpochs;
    }

The creation and storage of this database entry is triggered by a REST API call that the StopCOVID client application makes to the server upon device registration. As I and others had pointed out to the StopCOVID/ROBERT team weeks earlier, this means that a compromised, malicious or even simply “honest-but-curious” server (StopCOVID’s own threat model) could correlate IP addresses and user agents (and therefore devices) with their state entries in the single MongoDB database.
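To make the correlation concrete, here is a minimal sketch of what a passive observer on the server side would need to do. It is not taken from the StopCOVID code base: the class `CorrelationSketch`, its methods, the placeholder identifier and the example IP addresses and user agent are all hypothetical, and the only assumption is the one stated above, namely that each request carries both network metadata and something tied to the permanent identifier.

```java
import java.util.Base64;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Illustrative model only; none of these names come from the StopCOVID code base. */
final class CorrelationSketch {
    // Key: Base64 of the permanent identifier. Value: every "ip | user agent" pair seen with it.
    private final Map<String, Set<String>> observed = new HashMap<>();

    /** Records the network metadata that accompanies one authenticated request. */
    void observe(byte[] permanentIdentifier, String ipAddress, String userAgent) {
        String key = Base64.getEncoder().encodeToString(permanentIdentifier);
        observed.computeIfAbsent(key, k -> new LinkedHashSet<>())
                .add(ipAddress + " | " + userAgent);
    }

    /** Returns everything the observer has linked to the given identifier. */
    Set<String> metadataFor(byte[] permanentIdentifier) {
        String key = Base64.getEncoder().encodeToString(permanentIdentifier);
        return observed.getOrDefault(key, Collections.emptySet());
    }

    public static void main(String[] args) {
        CorrelationSketch observer = new CorrelationSketch();
        byte[] id = {0x01, 0x02, 0x03}; // placeholder identifier, not a real format
        observer.observe(id, "192.0.2.10", "ExampleAgent/1.0");   // e.g. at registration
        observer.observe(id, "198.51.100.7", "ExampleAgent/1.0"); // e.g. a later status request
        System.out.println(observer.metadataFor(id));
    }
}
```

The point of the sketch is how little is required: a map and one line of logging per request. Nothing in it deviates from the protocol, which is why it falls within the “honest-but-curious” model.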

This issue was pointed out by me and, independently, by Olivier Blazy. While the ROBERT team fully acknowledged the issue, their response was limited to an (actually false) comparison with a competing standard, DP-3T, and to suggesting mix networks as a potential solution. Mix networks are unlikely to be suitable for mass deployment and would likely constitute a design addition more complex than the entire current ROBERT design. Beyond this exchange, the ROBERT team did not treat the issue as a fundamental flaw in centralized proximity-tracing design.

Section 8 of the ROBERT design document outlines how the system can federate with other states:

In order for our system to be an effective tool, it must operate across neighboring states. This is especially true in the case of Europe, where freedom of movement is a core value. We therefore propose the use of a distributed, federated architecture where countries may operate their own back-ends and develop their own Apps.

This system could be used within France to avoid having one single database server: each region or department health service could run a server, and users would sign up with the server of their home region or department (or be assigned one at random, or choose one themselves). The federation between these servers could work as described in Section 8.
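As a rough illustration of the assignment step only, the following sketch picks a regional server for a new user. Everything in it is hypothetical: the class `RegionalServerSketch`, the region codes and the server names are placeholders, and it says nothing about how the federation described in Section 8 would actually exchange data between servers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Random;
import java.util.TreeSet;

/** Hypothetical sketch of assigning a user to one of several regional servers. */
final class RegionalServerSketch {
    private final Map<String, String> serverByRegion;
    private final List<String> allServers;
    private final Random random;

    RegionalServerSketch(Map<String, String> serverByRegion, Random random) {
        if (serverByRegion.isEmpty()) {
            throw new IllegalArgumentException("at least one regional server is required");
        }
        this.serverByRegion = serverByRegion;
        // Sorted and de-duplicated so the random fallback is reproducible for a given seed.
        this.allServers = new ArrayList<>(new TreeSet<>(serverByRegion.values()));
        this.random = random;
    }

    /** Uses the home region's server if known, otherwise falls back to a random one. */
    String chooseServer(String homeRegion) {
        String server = serverByRegion.get(homeRegion);
        if (server != null) {
            return server;
        }
        return allServers.get(random.nextInt(allServers.size()));
    }
}
```

Splitting the database this way does not remove the correlation problem on any individual server, but it would mean that compromising one server exposes one region rather than the whole country.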

Window of Compromise and Recovered Data

In reviewing the source code, it was determined that StopCOVID client devices check in via the REST API at least once every 24 hours, with the timer beginning at the moment of registration. Given that users are more likely to register during French waking hours, this means that an attacker with a window of compromise into the server for a single working day could completely and irreversibly deanonymize virtually all StopCOVID users, by correlating the IP addresses and user agents that contact the server through its REST API to the permanent identifiers in the MongoDB database. We repeat that this is completely in line with what the “honest-but-curious” adversary considered by the StopCOVID/ROBERT team could do.
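The window argument can be checked with a small model. The sketch below assumes the worst case for the attacker, in which every client checks in exactly once every 24 hours at the time of day it registered, and computes the fraction of clients that contact the server during a given observation window. The class name and the sample registration times are invented for illustration; they are not measurements.

```java
/** Toy model: which clients contact the server during an observation window? */
final class CheckInWindowSketch {
    static final int DAY_SECONDS = 24 * 60 * 60;

    /** True if a client that checks in daily at checkInSecondOfDay falls inside the window. */
    static boolean seen(int checkInSecondOfDay, int windowStartSecondOfDay, int windowLengthSeconds) {
        if (windowLengthSeconds >= DAY_SECONDS) {
            return true; // a full day always contains one check-in per client
        }
        // Offset of the check-in from the window start, wrapping around midnight.
        int delta = Math.floorMod(checkInSecondOfDay - windowStartSecondOfDay, DAY_SECONDS);
        return delta < windowLengthSeconds;
    }

    /** Fraction of clients observed at least once during the window. */
    static double fractionSeen(int[] checkInSecondsOfDay, int windowStartSecondOfDay, int windowLengthSeconds) {
        if (checkInSecondsOfDay.length == 0) {
            return 0.0;
        }
        int count = 0;
        for (int t : checkInSecondsOfDay) {
            if (seen(t, windowStartSecondOfDay, windowLengthSeconds)) {
                count++;
            }
        }
        return (double) count / checkInSecondsOfDay.length;
    }

    public static void main(String[] args) {
        // Invented registration times, clustered in waking hours (seconds since midnight).
        int[] checkIns = {8 * 3600, 9 * 3600, 12 * 3600, 13 * 3600, 17 * 3600, 19 * 3600, 23 * 3600};
        System.out.println("24 h window: " + fractionSeen(checkIns, 0, DAY_SECONDS));
        System.out.println("08:00-20:00: " + fractionSeen(checkIns, 8 * 3600, 12 * 3600));
    }
}
```

Under this model a 24-hour window always covers every active client, and a shorter window covers whatever share of users registered during those hours. Clients that check in more often than once a day only make the attacker’s job easier.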

Again, the StopCOVID/ROBERT team was aware of this issue, chose not to address it, and instead cited a CNIL report which did not discuss this issue at all. In fact it is unclear whether the CNIL did at any point seriously investigate this fundamental design flaw.

Potential for Undetectable Abuse by Authorities

The StopCOVID/ROBERT team cites the publication of server source code as a cornerstone of its transparency initiative. However, given that there are no attestations regarding the integrity of the code running on the server side, it is strictly impossible for third parties to determine if the code is not modified anywhere down the line in order to facilitate the correlation of IP address or user agent information with the permanent identifiers stored in the server’s MongoDB database. We again state that such an attack would be consistent with ROBERT’s adopted threat model of an “honest-but-curious” attacker. This flaw could apply to other proximity-tracing designs, but is significantly magnified in centralized designs that depend more on the server.

StopCOVID Application Limitations

Aside from the fundamental issue discussed above, which alone should discredit the StopCOVID/ROBERT standard entirely, the client application in its current state suffers from a variety of issues that significantly restrict its efficacy and viability.

Beta Released Without Confidentiality Policy

The StopCOVID beta was released with Lorem Ipsum filler text instead of a privacy policy. While this is easy to remedy and does not reflect on the technical or scientific soundness of the StopCOVID/ROBERT stack, it remains a poor indicator of the level of attention given to the system more generally.

Bluetooth Cannot Reliably Measure Physical Distance

A key premise of the StopCOVID application is that it can, via Bluetooth, reliably determine that contact events have occurred at a distance of less than 1 meter. This is impossible for a number of reasons:

- There is no such specified functionality within the Bluetooth specification, so any attempt to arrive at such a measurement would have to be done using ad-hoc methods.

- Such ad-hoc methods are unlikely to work because of a number of restrictive factors: first, different smartphone devices ship with different Bluetooth chipsets of varying quality. These different Bluetooth chipsets support different Bluetooth protocol versions, with different maximum ranges. Finally, these chipsets are hooked to antennas of different supported ranges. In summary, this means that a “strong signal” might be only obtained between device A and B at a range of five meters or less, whereas device A and device C may be able to maintain a signal rated at the same strength level easily even at a range of 10 meters or greater.

Paul-Olivier Dehaye has written an excellent technical deep-dive that goes into further detail regarding why inferring distance from Bluetooth signal strength is unlikely to work reliably.
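One way to see the problem is with the log-distance path loss model, a common textbook approximation for turning a received signal strength (RSSI) into a distance. I am using it here purely as an illustration: nothing in this post establishes that StopCOVID uses this model, and the calibration values and path-loss exponents below are made up.

```java
/** Textbook log-distance path loss estimate; all parameter values are illustrative. */
final class RssiDistanceSketch {
    /**
     * Estimates distance in meters from an RSSI reading.
     *
     * @param rssiDbm            received signal strength, in dBm
     * @param rssiAtOneMeterDbm  calibration value: expected RSSI at 1 m for this device pair
     * @param pathLossExponent   environment factor (about 2 in free space, higher indoors)
     */
    static double estimateMeters(double rssiDbm, double rssiAtOneMeterDbm, double pathLossExponent) {
        return Math.pow(10.0, (rssiAtOneMeterDbm - rssiDbm) / (10.0 * pathLossExponent));
    }

    public static void main(String[] args) {
        double rssi = -69.0; // the same reading in all three cases
        System.out.printf("calibration -59 dBm, n=2: %.2f m%n", estimateMeters(rssi, -59.0, 2.0));
        System.out.printf("calibration -69 dBm, n=2: %.2f m%n", estimateMeters(rssi, -69.0, 2.0));
        System.out.printf("calibration -59 dBm, n=3: %.2f m%n", estimateMeters(rssi, -59.0, 3.0));
    }
}
```

With these invented numbers, one and the same reading of -69 dBm corresponds to roughly 3.2 m under one calibration and exactly 1 m under another, and changing the environment factor shifts the estimate again. An application that does not know the calibration of every chipset and antenna pair, or the environment, cannot tell these cases apart.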

As such, StopCOVID is making an impossible promise to its users and this promise should be retracted.

StopCOVID’s Bluetooth Functional Requirements Cannot Be Reliably Satisfied on iOS

It is impossible to obtain reliable background functionality of the Bluetooth API on iOS devices in such a way that is suitable for the StopCOVID use case. Other contact tracing applications have already faced this problem and have had to resolve it with extreme measures that are unfit for daily use, such as relying on constant pings from Android devices which may or may not be nearby, showing a notification every 30 minutes to the user asking them to foreground the application, and so on.

StopCOVID Requires Fine-Grained Location Permissions and Administrative Bluetooth Permissions on Android

The StopCOVID application requires that users grant fine-grained (GPS) location permissions as well as administrative-level Bluetooth permissions. The latter allow the StopCOVID application to control the phone’s entire Bluetooth stack, including turning Bluetooth on or off, interfering with the Bluetooth connections made by other applications, and so on, as documented here.

Critical Vulnerabilities in the Bluetooth Standard

The upcoming CVE-2020-12856 vulnerability, which is currently embargoed, risks having a critical security impact on all Bluetooth communications made by proximity tracing applications, except those rolled out by Google and Apple’s internal APIs (since those already have mitigations for this attack). The discoverers of the vulnerability have already written a report showing how it impacts proximity-tracing applications. It is therefore irresponsible to roll out any third-party contact tracing application before the embargo for this issue is lifted in 17 days and a patch is rolled out. And yet, the planned release date for StopCOVID is this very weekend, in the event that it gets approved by French lawmakers.

Usage of the Google reCaptcha API

StopCOVID’s Android and iOS applications both make use of the Google reCaptcha service in order to limit spam and automated signups, thereby sending identifying information to Google, in the form of IP addresses and user agents, during the onboarding of every device.

StopCOVID Is Unfit for National Adoption

Because of the issues discussed above, it is clear not only that StopCOVID is unfit for national adoption, but also that it does not even qualify as the privacy-preserving design it claims to be. It is therefore surprising to see this standard championed by Inria, the French national computer science laboratory, especially given that Inria’s own researchers published a wide-ranging criticism of StopCOVID/ROBERT’s design, as well as of competing designs. This critique discusses a multitude of flaws in all contact-tracing designs, almost all of which have gone completely unaddressed by StopCOVID/ROBERT despite the criticism emanating from the very same research laboratory.

CNIL’s Analysis of StopCOVID

Similarly, CNIL’s analyses of StopCOVID (1, 2), which have been cited as approvals by the StopCOVID/ROBERT team, are revealed, after inspection, to be nothing of the sort. The statements made by CNIL so far do not take into account the vast majority of the flaws discussed in this post, limiting their statements on StopCOVID/ROBERT to the following:

- That subscription to StopCOVID must be strictly voluntary,

- That no negative implications must be attached to the lack of StopCOVID usage (such as being banned from using public transport),

- That care must be taken to fulfill GDPR requirements,

- That StopCOVID must have strong privacy and especially pseudonymity guarantees (which are not ultimately provided, as discussed earlier in this post),

- That StopCOVID must not ask for geolocation permissions from user devices (which it ends up doing, as shown earlier in this post).

It is unfortunate that a pair of reserved and highly specific CNIL statements are being thrown around by the StopCOVID/ROBERT team in order to shut down scientific discussions, as if an appeal to authority were valid in such contexts. It is doubly unfortunate because the CNIL statements do not even come close to clearing StopCOVID or lending it credence. As a matter of fact, it seems clear that the CNIL has not had any opportunity to update its opinion now that the StopCOVID source code has been released, and I wonder whether it will do so given the issues discussed in this post alongside, inevitably, separate concerns raised by other researchers.

StopCOVID’s Failures as a Privacy-Preserving Design

Different stakeholders, such as CNIL as well as the research community, are united in expressing that the effectiveness of such an application cannot even be ascertained before its release. Given that we have no barometer for ultimate effectiveness, it seems to be a great error to release such a fundamentally flawed design as a government-recommended digital measure across all of France.

Given the discussion above, it is clear that the adoption of StopCOVID/ROBERT on a national scale in France would constitute a permanent dilution of what it is that qualifies as a privacy-preserving design meant for national adoption in periods of national crisis.